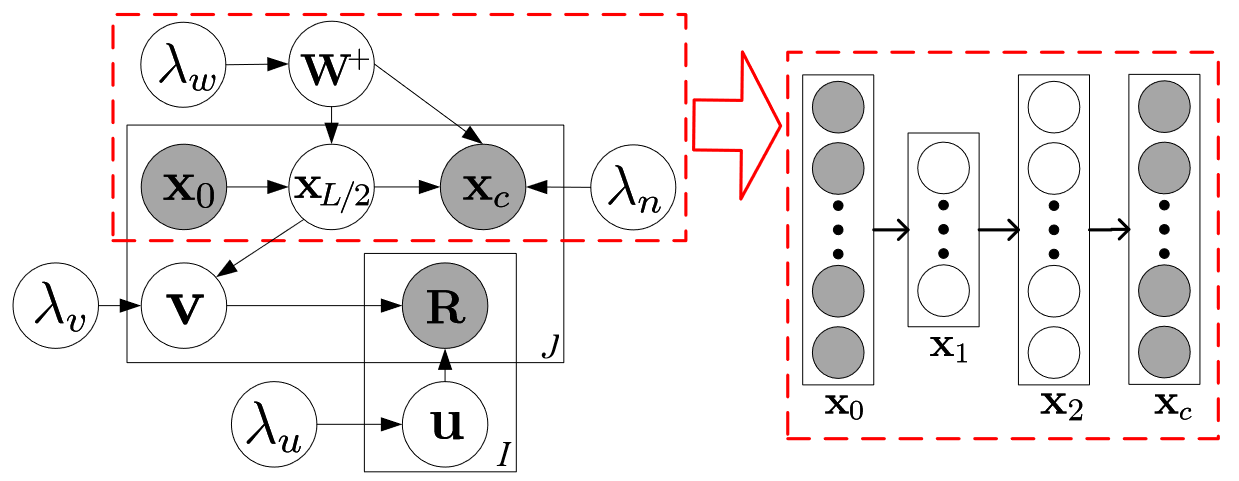

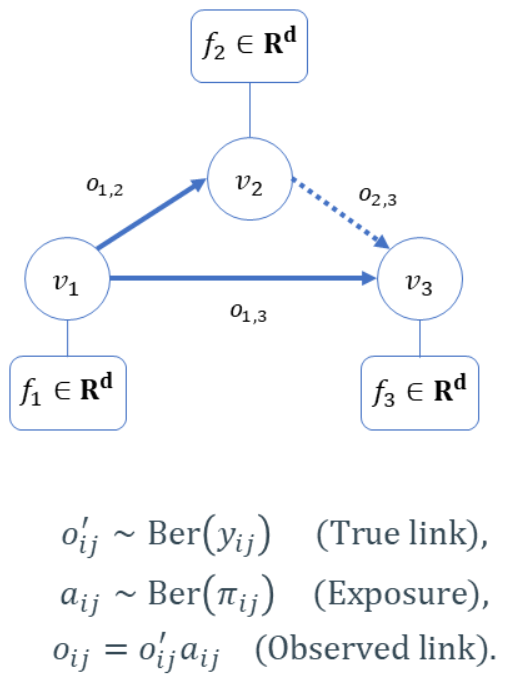

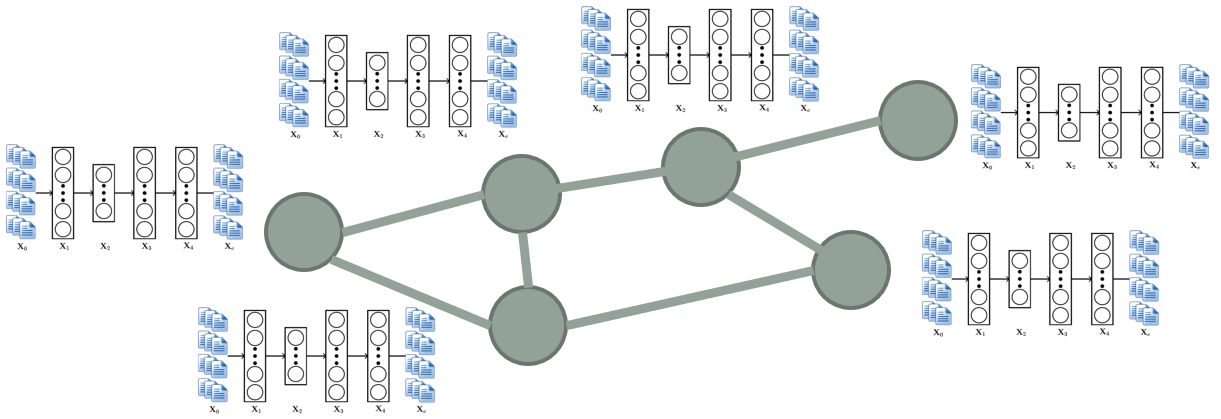

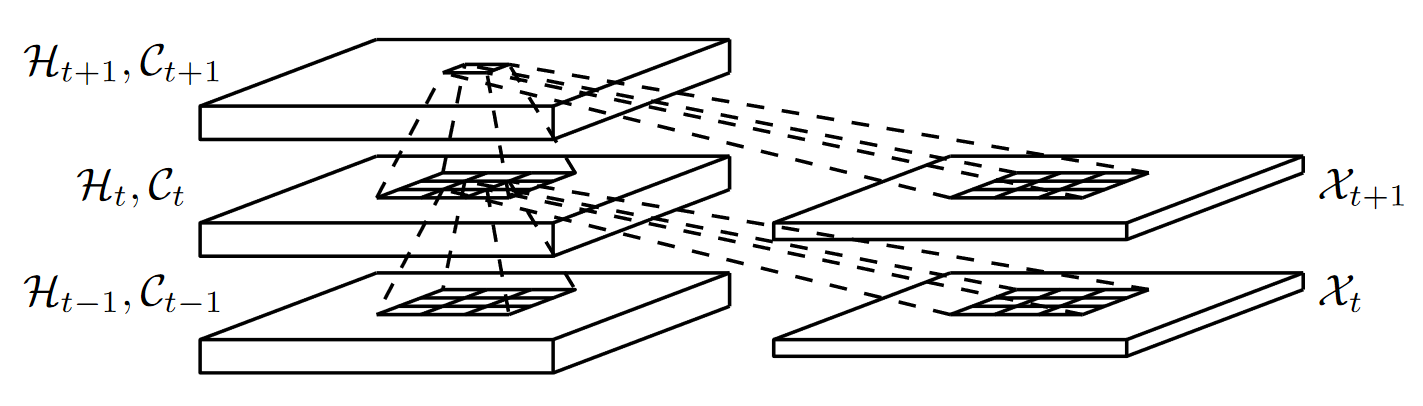

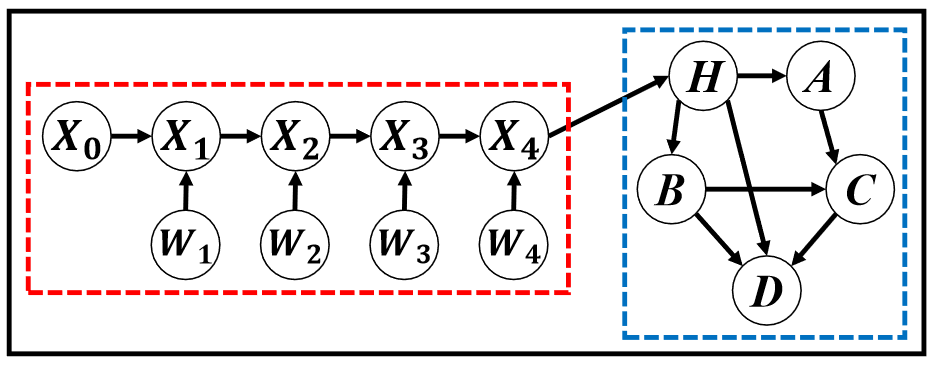

We developed the first principled probabilistic framework, dubbed Bayesian Deep Learning (BDL), which unifies perception in deep learning with reasoning in probabilistic graphical models (arXiv’14, KDD’15, AAAI’15, TKDE’16, ACM Computing Surveys’20, ICLR’23). We also pioneered some of its applications in healthcare (ICML’23a, Nature Medicine’22, Nature Medicine’21, AAAI’19a), speech recognition (ICML’21a, ICML’20b, AAAI’19b), recommender systems (NeurIPS’16a, KDD’15, AAAI’15), network analysis (AAAI’17), and computer vision (CVPR’21, ICCV’21a, ICML’23c).